News

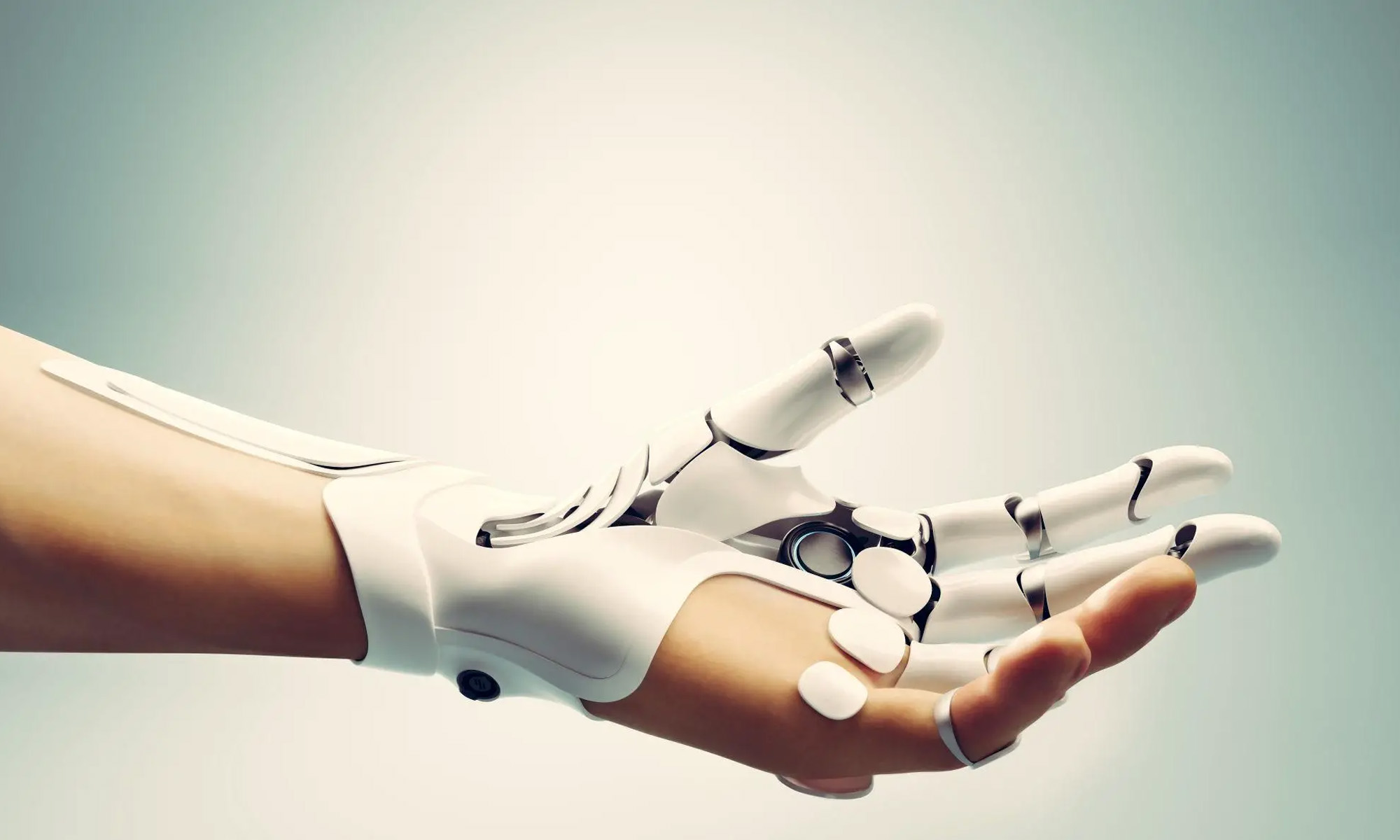

New Artificial Skin For Robots Allows Them To Feel Things

A groundbreaking new development from a Caltech researcher means that robots will soon be able to “feel” their surroundings, with sensations relayed back to human operators.

Wei Gao, an assistant professor of medical engineering at Caltech, has developed a new platform for robots and their operators known as M-Bot. Once it reaches the mainstream, the technology will allow humans to control robots more precisely and help protect those operators in hostile environments.

The platform is based around an artificial skin that effectively gives robots a sense of touch. The newly developed tool also uses machine learning and forearm sensors to allow human users to control robots with their own movements while receiving delicate haptic feedback through their skin.

The synthetic skin is composed of a gelatinous hydrogel and makes robot fingertips function much like our own. Inside the gel, layers of micrometer-scale sensors, applied in a process similar to inkjet printing, detect touch and report it to the operator through very gentle electrical stimulation. For example, if a robotic hand gripped an egg too firmly, the artificial skin sensors would relay the sensation of the shell being crushed to the human operator.


Wei Gao and his Caltech team hope the system will eventually find applications in everything from agriculture and environmental protection to security. The developers also note that robot operators will be able to “feel” their surroundings, including sensing how much fertilizer or pesticide is being applied to crops or whether suspicious bags contain traces of explosives.

Abdulmotaleb El Saddik, Professor of Computer Vision at Mohamed bin Zayed University of Artificial Intelligence, has noted that the new development offers even more applications and possibilities: “The ability to physically feel the touch, including handshakes and shoulder patting, could contribute to creating a sense of connection and empathy, enhancing the quality of interactions, particularly for the elderly and people living at a distance or those who are in space [such as] astronauts connecting with their family and children”.


Samsung Smart Glasses Teased For January, Software Reveal Imminent

According to Korean sources, the new wearable will launch alongside the Galaxy S25, with the accompanying software platform unveiled this December.

Samsung appears poised to introduce its highly anticipated smart glasses in January 2025, alongside the launch of the Galaxy S25. According to sources in Korea, the company will first reveal the accompanying software platform later this month.

According to a report from Yonhap News, Samsung’s unveiling strategy for the smart glasses echoes its approach with the Galaxy Ring earlier this year. The January showcase won’t be a full product launch but will likely feature teaser visuals at the Galaxy S25 event. A more detailed rollout could follow in subsequent months.

> Just in: Samsung is set to unveil a prototype of its augmented reality (AR) glasses, currently in development, during the Galaxy S25 Unpacked event early next year, likely in the form of videos or images.
>
> Additionally, prior to revealing the prototype, Samsung plans to introduce…
>
> Jukanlosreve (@Jukanlosreve), December 3, 2024

The Galaxy Ring, for example, debuted in January via a short presentation during Samsung’s Unpacked event. The full product unveiling came later at MWC in February, and the final release followed in July. Samsung seems to be adopting a similar phased approach with its smart glasses, which are expected to hit the market in the third quarter of 2025.

A Collaborative Software Effort

Samsung’s partnership with Google has played a key role in developing the smart glasses’ software. This collaboration was first announced in February 2023, with the device set to run on an Android-based platform. In July, the companies reiterated their plans to deliver an extended reality (XR) platform by the end of the year. The software specifics for the XR device are expected to be unveiled before the end of December.

Reports suggest that the smart glasses will resemble Ray-Ban Meta smart glasses in functionality. They won’t include a display and will weigh approximately 50 grams, emphasizing a lightweight, user-friendly design.

Feature Set And Compatibility

The glasses are rumored to integrate Google’s Gemini technology, alongside features like gesture recognition and potential payment capabilities. Samsung aims to create a seamless user experience by integrating the glasses with its broader Galaxy ecosystem, starting with the Galaxy S25, slated for release on January 22.